The meaning of living is learning to live truthfully; the meaning of life is to search for the meaning of living. (山河已无恙)

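Pod health checks and service availability checks

Purpose of health checks

Probes keep a Pod robust. When a Pod dies, the Deployment creates a new one. But if the Pod is still running while the application inside it has failed, the Deployment cannot detect that. A probe is therefore needed to check whether the Pod is actually serving requests.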

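Probe types

Kubernetes checks Pod health with two kinds of probes: LivenessProbe and ReadinessProbe. The kubelet runs both periodically to diagnose the health of each container. Both are declared per container in the Pod spec (for example in the Pod template of a Deployment); the Deployment itself does not run them.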
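As a minimal sketch of how the two probe types sit side by side on one container, the manifest below declares both. The Pod name `probe-demo`, the container name `web` and the timing values are assumptions chosen for illustration, not taken from the original setup.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo            # hypothetical name
spec:
  containers:
  - name: web                 # hypothetical container name
    image: nginx
    # Liveness: repeated failures make the kubelet restart the container.
    livenessProbe:
      httpGet:
        path: /index.html
        port: 80
      initialDelaySeconds: 10
      periodSeconds: 10
    # Readiness: failures only remove the Pod from Service endpoints.
    readinessProbe:
      httpGet:
        path: /index.html
        port: 80
      periodSeconds: 5
```

The two probes are independent: a container can be alive but not ready, in which case it keeps running and simply receives no Service traffic.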

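| Probe type | Description |
| --- | --- |
| LivenessProbe | Determines whether the container is alive (Running). If the LivenessProbe finds the container unhealthy, the kubelet kills the container and then acts according to the container's restart policy. If a container has no LivenessProbe, the kubelet treats its liveness result as always Success. |
| ReadinessProbe | Determines whether the container's service is available (Ready). Only Pods in the Ready state receive requests. For Pods managed by a Service, the association between the Service and the Pod Endpoint is also based on whether the Pod is Ready. If Ready becomes False at runtime, the system automatically removes the Pod from the Service's backend Endpoint list, and adds it back once it returns to Ready. This ensures that clients accessing the Service are never forwarded to a Pod instance whose service is unavailable. |

Detection methods and parameter configuration

Both LivenessProbe and ReadinessProbe can be configured with one of the following three methods.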

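| Method | Description |
| --- | --- |
| ExecAction | Runs a command inside the container. If the command exits with code 0, the container is considered healthy. |
| TCPSocketAction | Performs a TCP check against the container's IP address and port. If a TCP connection can be established, the container is considered healthy. |
| HTTPGetAction | Issues an HTTP GET against the container's IP address, port and path. If the response status code is at least 200 and below 400, the container is considered healthy. |

Every detection method takes parameters such as initialDelaySeconds and timeoutSeconds, whose meanings are as follows.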

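| Parameter | Description |
| --- | --- |
| initialDelaySeconds | How long to wait after the container starts before the first health check, in seconds. |
| timeoutSeconds | How long to wait for a response after the health check request is sent, in seconds. A timeout counts as a failed probe; for a liveness probe, the kubelet restarts the container once the failure threshold is reached. |
| periodSeconds | How often the probe runs. Default 10 seconds, minimum 1 second. |
| successThreshold | After a failure, the minimum number of consecutive successes needed for the probe to be considered successful again. Default 1, minimum 1; must be 1 for liveness. |
| failureThreshold | How many times Kubernetes retries after a probe fails on a started Pod. Giving up on a liveness probe means restarting the container; giving up on a readiness probe means the Pod is marked not ready. Default 3, minimum 1. |

The Kubernetes ReadinessProbe mechanism may not be enough for some complex applications that need their own way of judging whether the service inside the container is available.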

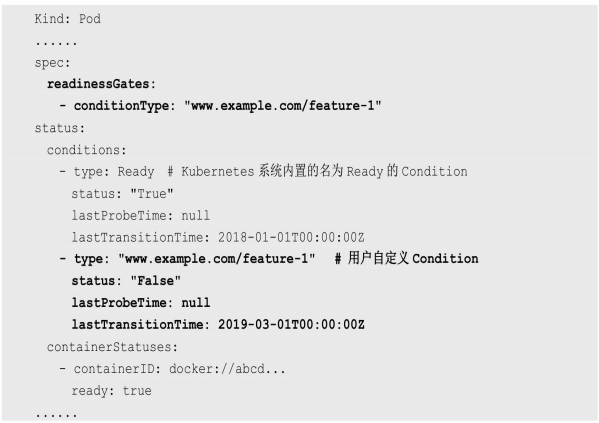

For this reason, Kubernetes introduced the PodReady++ feature in version 1.11 to extend the readiness mechanism. It reached GA (stable) in version 1.14 under the name Pod Readiness Gates.

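With Pod Readiness Gates, users can attach custom readiness conditions to a Pod to help Kubernetes decide when the Pod is available for service (Ready). For a custom condition to take effect, the user must provide an external controller that sets the corresponding Condition status.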

A Pod's Readiness Gates are set in the readinessGates field of the Pod spec, for example a new ReadinessGate whose condition type is www.example.com/feature-1.
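A minimal sketch of such a Pod definition might look like the following. The Pod name `readiness-gate-demo` and the container are assumptions added for illustration; only the condition type comes from the text above. The commented `status` section shows the kind of condition an external controller would be expected to write, it is not something set in the manifest.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: readiness-gate-demo        # hypothetical name
spec:
  readinessGates:
  - conditionType: "www.example.com/feature-1"
  containers:
  - name: web                      # hypothetical container
    image: nginx
# Written later by an external controller, not by the user:
# status:
#   conditions:
#   - type: "www.example.com/feature-1"
#     status: "True"
```

Until that condition is reported as True, the Pod stays not Ready even if all of its containers pass their readiness probes.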


The status of the newly added custom Condition is set by the user's external controller and defaults to False. Kubernetes sets the Pod to Ready (Ready is True) only when all readinessGates conditions are True. I do not fully understand this part yet and will come back to it later.

Preparing the learning environment

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$mkdir liveness-probe
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$cd liveness-probe/
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl create ns liveness-probe
    namespace/liveness-probe created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl config current-context
    kubernetes-admin@kubernetes
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl config set-context $(kubectl config current-context) --namespace=liveness-probe
    Context "kubernetes-admin@kubernetes" modified.

LivenessProbe

The liveness probe determines whether the container is alive (Running). If it finds the container unhealthy, the kubelet kills the container and then acts according to the container's restart policy.

ExecAction method: command

Runs a command inside the container. If the command exits with code 0, the container is considered healthy.

Resource file definition

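    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$cat liveness-probe.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: pod-liveness
      name: pod-liveness
    spec:
      containers:
      - args:
        - /bin/sh
        - -c
        - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 10
        livenessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 5 # no probing during the first 5s after the container starts
          periodSeconds: 5 # probe every 5s
        image: busybox
        imagePullPolicy: IfNotPresent
        name: pod-liveness
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Run this Pod. Once it is created, the container creates a file, sleeps for 30s, deletes the file and sleeps again. The liveness probe checks that the file exists. Note that the main process itself exits when the final sleep ends, which also causes a restart under restartPolicy: Always; a much longer final sleep isolates the effect of the probe.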

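    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl apply -f liveness-probe.yaml
    pod/pod-liveness created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods
    NAME           READY   STATUS    RESTARTS     AGE
    pod-liveness   1/1     Running   1 (8s ago)   41s

During the first 30s the file exists and there is no restart. After more than 30s the file is deleted, so the health check fails and the Pod's container is restarted according to the restart policy.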

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods
    NAME           READY   STATUS    RESTARTS      AGE
    pod-liveness   1/1     Running   2 (34s ago)   99s

After 99s it has already restarted a second time.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible 192.168.26.83 -m shell -a "docker ps | grep pod-liveness"
    192.168.26.83 | CHANGED | rc=0 >>
    00f4182c014e   7138284460ff   "/bin/sh -c 'touch /…"   6 seconds ago   Up 5 seconds   k8s_pod-liveness_pod-liveness_liveness-probe_81b4b086-fb28-4657-93d0-bd23e67f980a_0
    01c5cfa02d8c   registry.aliyuncs.com/google_containers/pause:3.5   "/pause"   7 seconds ago   Up 6 seconds   k8s_POD_pod-liveness_liveness-probe_81b4b086-fb28-4657-93d0-bd23e67f980a_0
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl get pods
    NAME           READY   STATUS    RESTARTS   AGE
    pod-liveness   1/1     Running   0          25s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl get pods
    NAME           READY   STATUS    RESTARTS      AGE
    pod-liveness   1/1     Running   1 (12s ago)   44s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible 192.168.26.83 -m shell -a "docker ps | grep pod-liveness"
    192.168.26.83 | CHANGED | rc=0 >>
    1eafd7e8a12a   7138284460ff   "/bin/sh -c 'touch /…"   15 seconds ago   Up 14 seconds   k8s_pod-liveness_pod-liveness_liveness-probe_81b4b086-fb28-4657-93d0-bd23e67f980a_1
    01c5cfa02d8c   registry.aliyuncs.com/google_containers/pause:3.5   "/pause"   47 seconds ago   Up 47 seconds   k8s_POD_pod-liveness_liveness-probe_81b4b086-fb28-4657-93d0-bd23e67f980a_0
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

Looking at the container IDs in docker on the node, the application container's ID differs before and after, which confirms that the container was killed and recreated. The pause container, and therefore the Pod itself, stays the same.

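HTTPGetAction method

Issues an HTTP GET against the container's IP address, port and path. If the response status code is at least 200 and below 400, the container is considered healthy.

Create the resource file, which also shows how the related parameters are used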

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$cat liveness-probe-http.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: pod-livenss-probe
      name: pod-livenss-probe
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: pod-livenss-probe
        livenessProbe:
          failureThreshold: 3 # number of retries after a probe fails on a started Pod
          httpGet:
            path: /index.html
            port: 80
            scheme: HTTP
          initialDelaySeconds: 10 # seconds to wait after container start before the first probe
          periodSeconds: 10 # probe frequency, default 10s, minimum 1s
          successThreshold: 1 # consecutive successes needed after a failure to count as success
          timeoutSeconds: 10 # probe timeout, default 1s, minimum 1s
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Run this Pod. Its probe requests the default Nginx welcome page.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$vim liveness-probe-http.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl apply -f liveness-probe-http.yaml
    pod/pod-livenss-probe created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods
    NAME                READY   STATUS    RESTARTS   AGE
    pod-livenss-probe   1/1     Running   0          15s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl exec -it pod-livenss-probe -- rm /usr/share/nginx/html/index.html
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods
    NAME                READY   STATUS    RESTARTS     AGE
    pod-livenss-probe   1/1     Running   1 (1s ago)   2m31s

Once the welcome page is deleted, the request returns an error, the probe fails, and the container is restarted.

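TCPSocketAction method

Performs a TCP check against the container's IP address and port. If a TCP connection can be established, the container is considered healthy.

Resource file definition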

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$cat liveness-probe-tcp.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: pod-livenss-probe
      name: pod-livenss-probe
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: pod-livenss-probe
        livenessProbe:
          failureThreshold: 3
          tcpSocket:
            port: 8080
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 10
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

The probe connects to port 8080, but nothing in the container listens on 8080, so the connection cannot be established. The probe fails and the container is restarted.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl apply -f liveness-probe-tcp.yaml
    pod/pod-livenss-probe created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods
    NAME                READY   STATUS    RESTARTS   AGE
    pod-livenss-probe   1/1     Running   0          8s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods
    NAME                READY   STATUS    RESTARTS     AGE
    pod-livenss-probe   1/1     Running   1 (4s ago)   44s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$

ReadinessProbe

The readiness probe determines whether the container's service is available (Ready). Only Pods that have reached the Ready state receive requests; otherwise they cannot be reached through the Service.
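The three detection methods apply to readiness probes as well. As a hedged sketch (the Pod name `readiness-http-demo`, the container name and the timing values are assumptions, not from the original walkthrough), an HTTP-based readiness probe on an Nginx container could be declared like this:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: readiness-http-demo      # hypothetical name
  labels:
    run: readiness-http-demo
spec:
  containers:
  - name: web                    # hypothetical container name
    image: nginx
    imagePullPolicy: IfNotPresent
    readinessProbe:
      httpGet:
        path: /index.html        # default Nginx welcome page
        port: 80
      initialDelaySeconds: 5     # wait 5s before the first check
      periodSeconds: 5           # check every 5s
      failureThreshold: 3        # mark not ready after 3 consecutive failures
```

Unlike a liveness probe, a failing readiness probe does not restart the container; the Pod only shows 0/1 in the READY column and is taken out of Service endpoints.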

ExecAction method: command

Resource file definition. A lifecycle hook creates the file that the probe checks.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$cat readiness-probe.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: pod-liveness
      name: pod-liveness
    spec:
      containers:
      - readinessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 5 # no probing during the first 5s after the container starts
          periodSeconds: 5 # probe every 5s
        image: nginx
        imagePullPolicy: IfNotPresent
        name: pod-liveness
        resources: {}
        lifecycle:
          postStart:
            exec:
              command: ["/bin/sh", "-c","touch /tmp/healthy"]
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Create three Nginx Pods, then create an SVC from the Pods for testing

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$sed 's/pod-liveness/pod-liveness-1/' readiness-probe.yaml | kubectl apply -f -
    pod/pod-liveness-1 created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$sed 's/pod-liveness/pod-liveness-2/' readiness-probe.yaml | kubectl apply -f -
    pod/pod-liveness-2 created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods -o wide
    NAME             READY   STATUS    RESTARTS   AGE    IP             NODE                         NOMINATED NODE   READINESS GATES
    pod-liveness     1/1     Running   0          3m1s   10.244.70.50   vms83.liruilongs.github.io   <none>           <none>
    pod-liveness-1   1/1     Running   0          2m     10.244.70.51   vms83.liruilongs.github.io   <none>           <none>
    pod-liveness-2   1/1     Running   0          111s   10.244.70.52   vms83.liruilongs.github.io   <none>           <none>

Change the home page text

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$serve=pod-liveness
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl exec -it $serve -- sh -c "cat /usr/share/nginx/html/index.html"
    pod-liveness
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$serve=pod-liveness-1
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$serve=pod-liveness-2
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$

Change the labels

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods --show-labels
    NAME             READY   STATUS    RESTARTS   AGE   LABELS
    pod-liveness     1/1     Running   0          15m   run=pod-liveness
    pod-liveness-1   1/1     Running   0          14m   run=pod-liveness-1
    pod-liveness-2   1/1     Running   0          14m   run=pod-liveness-2
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl edit pods pod-liveness-1
    pod/pod-liveness-1 edited
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl edit pods pod-liveness-2
    pod/pod-liveness-2 edited
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods --show-labels
    NAME             READY   STATUS    RESTARTS   AGE   LABELS
    pod-liveness     1/1     Running   0          17m   run=pod-liveness
    pod-liveness-1   1/1     Running   0          16m   run=pod-liveness
    pod-liveness-2   1/1     Running   0          16m   run=pod-liveness
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$

Check the file that will be deleted

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl exec -it pod-liveness -- ls /tmp/healthy
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl exec -it pod-liveness-1 -- ls /tmp/healthy

Create an SVC from the Pod

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl expose --name=svc pod pod-liveness --port=80
    service/svc exposed
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get ep
    NAME   ENDPOINTS                                         AGE
    svc    10.244.70.50:80,10.244.70.51:80,10.244.70.52:80   16s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get svc
    NAME   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
    svc    ClusterIP   10.104.246.121   <none>        80/TCP    36s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods -o wide
    NAME             READY   STATUS    RESTARTS   AGE   IP             NODE                         NOMINATED NODE   READINESS GATES
    pod-liveness     1/1     Running   0          24m   10.244.70.50   vms83.liruilongs.github.io   <none>           <none>
    pod-liveness-1   1/1     Running   0          23m   10.244.70.51   vms83.liruilongs.github.io   <none>           <none>
    pod-liveness-2   1/1     Running   0          23m   10.244.70.52   vms83.liruilongs.github.io   <none>           <none>

Test that the SVC works: requests are balanced across the three Pods

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$while true; do curl 10.104.246.121 ; sleep 1
    > done
    pod-liveness
    pod-liveness-2
    pod-liveness
    pod-liveness-1
    pod-liveness-2
    ^C

Test after deleting the file

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl exec -it pod-liveness -- rm -rf /tmp/
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl exec -it pod-liveness -- ls /tmp/
    ls: cannot access '/tmp/': No such file or directory
    command terminated with exit code 2
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$while true; do curl 10.104.246.121 ; sleep 1; done
    pod-liveness-2
    pod-liveness-2
    pod-liveness-2
    pod-liveness-1
    pod-liveness-2
    pod-liveness-2
    pod-liveness-1
    ^C

The pod-liveness Pod no longer serves requests: its readiness probe fails, so it has been removed from the Service endpoints.
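The Service in this test was created with `kubectl expose`. As a sketch of what that command roughly corresponds to declaratively, assuming the selector is taken from the Pod's `run=pod-liveness` label and the target port equals the exposed port, the manifest would look like this:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: svc
spec:
  type: ClusterIP
  selector:
    run: pod-liveness     # matches all three Pods after the label edit
  ports:
  - port: 80              # Service port
    targetPort: 80        # container port of the Nginx Pods (assumed equal)
    protocol: TCP
```

Because all three Pods carry the same label, they all match the selector; only the ones whose readiness probe passes are listed as endpoints.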

Health checks used by kubeadm

kube-apiserver.yaml uses both kinds of probes at the same time

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$cat /etc/kubernetes/manifests/kube-apiserver.yaml | grep -A 8 readi
        readinessProbe:
          failureThreshold: 3
          httpGet:
            host: 192.168.26.81
            path: /readyz
            port: 6443
            scheme: HTTPS
          periodSeconds: 1
          timeoutSeconds: 15
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$cat /etc/kubernetes/manifests/kube-apiserver.yaml | grep -A 9 liveness
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 192.168.26.81
            path: /livez
            port: 6443
            scheme: HTTPS
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 15
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

job & cronjob

Job: batch scheduling

Kubernetes has supported batch-type applications since version 1.2. A batch task is defined and started through the Kubernetes Job resource object.

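A batch task usually starts several computing processes, in parallel or serially, to handle a batch of work items. Once they are all processed, the whole batch task ends.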

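The Kubernetes website describes it as follows: a Job creates one or more Pods and will continue to retry execution of the Pods until a specified number of them successfully terminate. As Pods successfully complete, the Job tracks the successful completions. When a specified number of successful completions is reached, the task (that is, the Job) is complete. Deleting a Job cleans up all the Pods it created. Suspending a Job deletes its active Pods until the Job is resumed again.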

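In a simple case, you create one Job object in order to reliably run one Pod to completion. The Job object starts a new Pod if the first Pod fails or is deleted (for example because of a node hardware failure or a node reboot). You can also use a Job to run multiple Pods in parallel.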

With batch parallelism in mind, Kubernetes divides Jobs into the following three types.

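| Type | Description |
| --- | --- |
| Non-parallel Jobs | Normally a Job starts only one Pod, and a replacement Pod is started only if that Pod fails. Once the Pod finishes normally, the Job ends. |
| Parallel Jobs with a fixed completion count | A parallel Job starts multiple Pods. The Job's .spec.completions must be set to a positive number, and the Job ends when the number of Pods that finish normally reaches that value. The Job's .spec.parallelism controls the degree of parallelism, that is, how many Pods run at the same time to process work items. |
| Parallel Jobs with a work queue | A work-queue style parallel Job needs a separate queue in which all work items are stored. .spec.completions must not be set. Such a Job has the properties listed below. |

Properties of a Job with a work queue:

- Each Pod can independently determine whether there are still work items to process.
- If a Pod finishes normally, the Job does not start any new Pod.
- If one Pod has finished successfully, no other Pod should still be doing work; they should all be about to finish and exit.
- If all Pods have finished and at least one of them finished successfully, the whole Job finishes successfully.

Here we build a demo for the first and second types only. The third will follow when there is time; it really comes down to resource configuration parameters.

Environment preparation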

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl config set-context $(kubectl config current-context) --namespace=liruiling-job-create
    Context "kubernetes-admin@kubernetes" modified.
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl create ns liruiling-job-create
    namespace/liruiling-job-create created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$vim myjob.yaml

Creating a job

Create a Job that runs echo "hello jobs"

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$cat myjob.yaml
    apiVersion: batch/v1
    kind: Job
    metadata:
      creationTimestamp: null
      name: my-job
    spec:
      template:
        metadata:
          creationTimestamp: null
        spec:
          containers:
          - command:
            - sh
            - -c
            - echo "hello jobs"
            - sleep 15
            image: busybox
            name: my-job
            resources: {}
          restartPolicy: Never
    status: {}

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl apply -f myjob.yaml
    job.batch/my-job created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get pods
    NAME              READY   STATUS              RESTARTS   AGE
    my-job--1-jdzqd   0/1     ContainerCreating   0          7s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get jobs
    NAME     COMPLETIONS   DURATION   AGE
    my-job   0/1           17s        17s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get pods
    NAME              READY   STATUS      RESTARTS   AGE
    my-job--1-jdzqd   0/1     Completed   0          24s

A STATUS of Completed means the run succeeded. Check the logs

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get jobs
    NAME     COMPLETIONS   DURATION   AGE
    my-job   1/1           19s        46s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl logs my-job--1-jdzqd
    hello jobs
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$

Job configuration parameters explained

The Job restart policy

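restartPolicy: Never

- Never: as long as the task has not completed, a new Pod is created to run it until the Job completes, so several Pods may be produced.
- OnFailure: as long as the Pod has not completed, the container is restarted within the same Pod until the Job completes.

activeDeadlineSeconds: maximum running time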

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl explain jobs.spec | grep act
       activeDeadlineSeconds        <integer>
         may be continuously active before the system tries to terminate it; value
         given time. The actual number of pods running in steady state will be less
         false to true), the Job controller will delete all active Pods associated
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$vim myjobact.yaml

Create a Job that uses activeDeadlineSeconds (the maximum running time)

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$cat myjobact.yaml
    apiVersion: batch/v1
    kind: Job
    metadata:
      creationTimestamp: null
      name: my-job
    spec:
      template:
        metadata:
          creationTimestamp: null
        spec:
          activeDeadlineSeconds: 5 # maximum running time
          containers:
          - command:
            - sh
            - -c
            - echo "hello jobs"
            - sleep 15
            image: busybox
            name: my-job
            resources: {}
          restartPolicy: Never
    status: {}

The task does not finish within 5 seconds, so a new Pod is created to run it

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl delete -f myjob.yaml
    job.batch "my-job" deleted
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl apply -f myjobact.yaml
    job.batch/my-job created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get pods
    NAME              READY   STATUS              RESTARTS   AGE
    my-job--1-ddhbj   0/1     ContainerCreating   0          7s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get jobs
    NAME     COMPLETIONS   DURATION   AGE
    my-job   0/1           16s        16s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get pods
    NAME              READY   STATUS              RESTARTS   AGE
    my-job--1-ddhbj   0/1     Completed           0          23s
    my-job--1-mzw2p   0/1     ContainerCreating   0          3s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get pods
    NAME              READY   STATUS      RESTARTS   AGE
    my-job--1-ddhbj   0/1     Completed   0          48s
    my-job--1-mzw2p   0/1     Completed   0          28s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get jobs
    NAME     COMPLETIONS   DURATION   AGE
    my-job   0/1           55s        55s

Some other parameters

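- parallelism: N runs N Pods at a time.
- completions: M is the number of Pods that must run successfully for the Job to end, that is, the number of Pods in the Completed state.
- backoffLimit: N is how many times to retry if the Job fails.

parallelism is the number of Pods run at one time; this value never exceeds the value of completions.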

Create a parallel multi-task Job

    apiVersion: batch/v1
    kind: Job
    metadata:
      creationTimestamp: null
      name: my-job
    spec:
      backoffLimit: 6 # number of retries
      completions: 6 # how many successful runs are needed
      parallelism: 2 # how many Pods run at a time
      template:
        metadata:
          creationTimestamp: null
        spec:
          containers:
          - command:
            - sh
            - -c
            - echo "hello jobs"
            - sleep 15
            image: busybox
            name: my-job
            resources: {}
          restartPolicy: Never
    status: {}

Create the Job with these parameters

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl apply -f myjob-parma.yaml
    job.batch/my-job created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get pods jobs
    Error from server (NotFound): pods "jobs" not found
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get pods job
    Error from server (NotFound): pods "job" not found
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get jobs
    NAME     COMPLETIONS   DURATION   AGE
    my-job   0/6           19s        19s

Observe the effect of the parameters: the Job runs 6 Pods, two at a time

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get pods
    NAME              READY   STATUS              RESTARTS   AGE
    my-job--1-9vvst   0/1     Completed           0          25s
    my-job--1-h24cw   0/1     ContainerCreating   0          5s
    my-job--1-jgq2j   0/1     Completed           0          24s
    my-job--1-mbmg6   0/1     ContainerCreating   0          1s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get jobs
    NAME     COMPLETIONS   DURATION   AGE
    my-job   2/6           35s        35s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get jobs
    NAME     COMPLETIONS   DURATION   AGE
    my-job   3/6           48s        48s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get pods
    NAME              READY   STATUS      RESTARTS   AGE
    my-job--1-9vvst   0/1     Completed   0          91s
    my-job--1-b95qv   0/1     Completed   0          35s
    my-job--1-h24cw   0/1     Completed   0          71s
    my-job--1-jgq2j   0/1     Completed   0          90s
    my-job--1-mbmg6   0/1     Completed   0          67s
    my-job--1-njbfj   0/1     Completed   0          49s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get jobs
    NAME     COMPLETIONS   DURATION   AGE
    my-job   6/6           76s        93s

Hands-on: computing pi to 500 digits

Create a job from the command line

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl create job job3 --image=perl --dry-run=client -o yaml -- perl -Mbignum=bpi -wle 'print bpi(500)'
    apiVersion: batch/v1
    kind: Job
    metadata:
      creationTimestamp: null
      name: job3
    spec:
      template:
        metadata:
          creationTimestamp: null
        spec:
          containers:
          - command:
            - perl
            - -Mbignum=bpi
            - -wle
            - print bpi(500)
            image: perl
            name: job3
            resources: {}
          restartPolicy: Never
    status: {}

Pull the image, then create the job from the command line

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible node -m shell -a "docker pull perl"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl create job job2 --image=perl -- perl -Mbignum=bpi -wle 'print bpi(500)'
    job.batch/job2 created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get pods
    NAME            READY   STATUS      RESTARTS   AGE
    job2--1-5jlbl   0/1     Completed   0          2m4s

View the output of the job

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl logs job2--1-5jlbl
    3.1415926535897932384626433832795028841971693993751058209749445923078164062862089986280348253421170679821480865132823066470938446095505822317253594081284811174502841027019385211055596446229489549303819644288109756659334461284756482337867831652712019091456485669234603486104543266482133936072602491412737245870066063155881748815209209628292540917153643678925903600113305305488204665213841469519415116094330572703657595919530921861173819326117931051185480744623799627495673518857527248912279381830119491

Cronjob: scheduled tasks

In the .spec.jobTemplate.spec field of a cronjob yaml file you can set the activeDeadlineSeconds parameter, which limits how long the Pods generated by the cronjob may run.
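As a sketch of where that parameter goes, the manifest below places `activeDeadlineSeconds` under `.spec.jobTemplate.spec`. The name `test-job-deadline` and the 30-second limit are assumptions chosen for illustration.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: test-job-deadline          # hypothetical name
spec:
  schedule: "*/1 * * * *"          # every minute
  jobTemplate:
    spec:
      activeDeadlineSeconds: 30    # assumed limit: each generated Job may be active for at most 30s
      template:
        spec:
          containers:
          - name: test-job
            image: busybox
            command: ["/bin/sh", "-c", "date"]
          restartPolicy: OnFailure
```

The deadline applies to each Job created from the template, so every scheduled run gets its own 30-second budget.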

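Starting with version 1.5, Kubernetes added a new kind of Job, the Cron Job, a scheduled task similar to Linux cron. Let's look at how to define and use it. First, make sure the Kubernetes version is 1.8 or later.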

Since Kubernetes 1.9, kubectl has the alias cj for cronjob, and the kubectl set image/env commands can also be applied to CronJob objects.

Creating a Cronjob

Create a Pod every minute that runs the date command

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl create cronjob test-job --image=busybox --schedule="*/1 * * * *" --dry-run=client -o yaml -- /bin/sh -c "date"
    apiVersion: batch/v1
    kind: CronJob
    metadata:
      creationTimestamp: null
      name: test-job
    spec:
      jobTemplate:
        metadata:
          creationTimestamp: null
          name: test-job
        spec:
          template:
            metadata:
              creationTimestamp: null
            spec:
              containers:
              - command:
                - /bin/sh
                - -c
                - date
                image: busybox
                name: test-job
                resources: {}
              restartPolicy: OnFailure
      schedule: '*/1 * * * *'
    status: {}

It can be created either from a yaml file or from the command line

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get pods
    No resources found in liruiling-job-create namespace.
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl apply -f jobcron.yaml
    cronjob.batch/test-job configured
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get job
    NAME                COMPLETIONS   DURATION   AGE
    test-job-27330246   0/1           0s         0s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get pods
    NAME                         READY   STATUS    RESTARTS   AGE
    test-job-27330246--1-xn5r6   1/1     Running   0          4s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get pods
    NAME                         READY   STATUS      RESTARTS   AGE
    test-job-27330246--1-xn5r6   0/1     Completed   0          100s
    test-job-27330247--1-9blnp   0/1     Completed   0          40s

Running with --watch gives a more direct view of the history and current state of the tasks that the Cron Job triggers periodically:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl apply -f jobcron.yaml
    cronjob.batch/test-job created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get cronjobs
    NAME       SCHEDULE      SUSPEND   ACTIVE   LAST SCHEDULE   AGE
    test-job   */1 * * * *   False     0        <none>          12s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get jobs --watch
    NAME                COMPLETIONS   DURATION   AGE
    test-job-27336917   0/1                      0s
    test-job-27336917   0/1           0s         0s
    test-job-27336917   1/1           25s        25s
    test-job-27336918   0/1                      0s
    test-job-27336918   0/1           0s         0s
    test-job-27336918   1/1           26s        26s
    ^C
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$kubectl get jobs -o wide
    NAME                COMPLETIONS   DURATION   AGE    CONTAINERS   IMAGES    SELECTOR
    test-job-27336917   1/1           25s        105s   test-job     busybox   controller-uid=35e43bbc-5869-4bda-97db-c027e9a36b97
    test-job-27336918   1/1           26s        45s    test-job     busybox   controller-uid=82d2e4a5-716c-42bf-bc7d-3137dd0e50e8
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
    └─$

Service

Service is a core Kubernetes concept. It gives a group of container applications with the same function a single entry address and distributes requests across the backend container instances. The topic covers the Service load-balancing mechanism, how to access a Service, Headless Service, the mechanism and practice of the DNS service, Ingress layer-7 routing, and more.

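Here we study Services from three angles: creation, publishing and discovery, with a hands-on focus. The theory behind Headless Service, the DNS service and Ingress layer-7 routing will be shared in later posts.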

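Through the Service definition, Kubernetes provides a unified entry point and a load-balancing mechanism for distributed applications. A Service can also be configured in other ways, for example with multiple ports, as a pointer to a service outside the cluster, or as a Headless Service.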
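As a hedged sketch of two of these variants, the manifests below show a Service with multiple ports and a Headless Service. The Service names, the `app: web1` selector and the port numbers are assumptions for illustration only.

```yaml
# Multi-port Service: each port needs a name when there is more than one.
apiVersion: v1
kind: Service
metadata:
  name: web-multi-port       # hypothetical name
spec:
  selector:
    app: web1                # assumed Pod label
  ports:
  - name: http
    port: 80
    targetPort: 80
  - name: https
    port: 443
    targetPort: 443
---
# Headless Service: clusterIP is None, so no virtual IP is allocated
# and DNS resolves directly to the backing Pod IPs.
apiVersion: v1
kind: Service
metadata:
  name: web-headless         # hypothetical name
spec:
  clusterIP: None
  selector:
    app: web1
  ports:
  - port: 80
```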

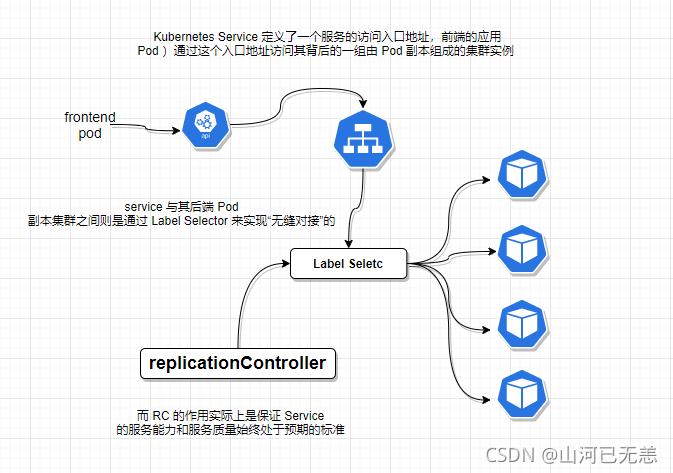

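A Kubernetes Service defines the entry address of a service. Frontend applications (Pods) use this address to reach the cluster of Pod replicas behind it, and the Service is connected to its backend Pod replicas through a Label Selector. The role of an RC or Deployment is to keep the Service's capacity and quality at the expected level.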

Service creation

Why a Service is needed

Prepare the learning environment by creating a new namespace, liruilong-svc-create

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$d=k8s-svc-create
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$mkdir $d ;cd $d
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl config set-context $(kubectl config current-context) --namespace=liruilong-svc-create
    Context "kubernetes-admin@kubernetes" modified.
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl create ns liruilong-svc-create
    namespace/liruilong-svc-create created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get svc
    No resources found in liruilong-svc-create namespace.
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$

Providing a service with a Pod resource

We first create an ordinary service without the Service resource, using only a Pod

Generate the yaml file for a Pod resource from the command line, then modify it

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl run pod-svc --image=nginx --image-pull-policy=IfNotPresent --dry-run=client -o yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: pod-svc
      name: pod-svc
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: pod-svc
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

We modify this so that the Pod can serve traffic from outside. The approach is to set a container-level hostPort, which maps the container application's port to the host.

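    ports:
    - containerPort: 80 # container port
      hostPort: 800 # port exposed on the host

Because the port is mapped through the host, once the Pod is rescheduled to another node the original node can no longer provide the service.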

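With the Pod-level setting hostNetwork=true, the ports of all containers in the Pod are mapped directly onto the physical machine. Note that when hostNetwork=true is set, hostPort defaults to containerPort if it is not specified in the container's ports section, and if hostPort is specified it must equal containerPort: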

    spec:
      hostNetwork: true
      containers:
      - name: webapp
        image: tomcat
        imagePullPolicy: Never
        ports:
        - containerPort: 8080

A Pod generated in the following way serves traffic on port 800; the IP here is the host IP.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$cat pod-svc.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: pod-svc
      name: pod-svc
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: pod-svc
        ports:
        - containerPort: 80
          hostPort: 800
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$

This is a standalone Pod resource; the resulting Pod serves traffic through the Node it runs on.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl apply -f pod-svc.yaml
    pod/pod-svc created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods -owide
    NAME      READY   STATUS    RESTARTS   AGE   IP             NODE                         NOMINATED NODE   READINESS GATES
    pod-svc   1/1     Running   0          3s    10.244.70.50   vms83.liruilongs.github.io   <none>           <none>

pod-svc can be reached through an IP plus port. With hostPort this works much like docker, where a port is mapped onto the host, so the service is reachable at the node's IP on port 800.

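Next we create a few more Pods in the same way, each also serving through the Node it lands on. We only have two worker nodes, so the third Pod goes straight to Pending because of a port conflict: with host port mapping, each node can only have one such Pod scheduled onto it.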

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$sed 's/pod-svc/pod-svc-1/' pod-svc.yaml | kubectl apply -f -
    pod/pod-svc-1 created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods -owide
    NAME        READY   STATUS    RESTARTS   AGE     IP               NODE                         NOMINATED NODE   READINESS GATES
    pod-svc     1/1     Running   0          2m46s   10.244.70.50     vms83.liruilongs.github.io   <none>           <none>
    pod-svc-1   1/1     Running   0          13s     10.244.171.176   vms82.liruilongs.github.io   <none>           <none>
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$sed 's/pod-svc/pod-svc-2/' pod-svc.yaml | kubectl apply -f -
    pod/pod-svc-2 created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods -owide
    NAME        READY   STATUS    RESTARTS   AGE     IP               NODE                         NOMINATED NODE   READINESS GATES
    pod-svc     1/1     Running   0          4m18s   10.244.70.50     vms83.liruilongs.github.io   <none>           <none>
    pod-svc-1   1/1     Running   0          105s    10.244.171.176   vms82.liruilongs.github.io   <none>           <none>
    pod-svc-2   0/1     Pending   0          2s      <none>           <none>                       <none>           <none>
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$

At this point, if we want more Pods to provide the service, or want load balancing, we need a Service.

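Creating a Service

In general, an application that serves external clients needs some mechanism to do so. For container applications the simplest way is TCP/IP: listening on an IP address and port, that is, Pod IP plus container port.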

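The service inside a container application can be reached directly through the Pod's IP address and port, but a Pod's IP address is not stable. And if the application is deployed in a distributed fashion, with several instances serving together, a load balancer is needed in front of those instances to distribute requests.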

The Kubernetes Service is the core component that solves these problems. A Service can be created with the kubectl expose command.

The system assigns the newly created Service a virtual IP address (ClusterIP), and the port the Service needs is copied from the containerPort in the Pod.

Below we create Services backed by multiple Pods and by a Deployment.

Creating an SVC with a deployment

Create a deployment with three Nginx replicas

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl create deployment web1 --image=nginx --replicas=3 --dry-run=client -o yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: web1
      name: web1
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: web1
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: web1
        spec:
          containers:
          - image: nginx
            name: nginx
            resources: {}
    status: {}
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl create deployment web1 --image=nginx --replicas=3 --dry-run=client -o yaml > web1.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods -o wide
    No resources found in liruilong-svc-create namespace.
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl apply -f web1.yaml
    deployment.apps/web1 created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods -o wide
    NAME                    READY   STATUS              RESTARTS   AGE   IP       NODE                         NOMINATED NODE   READINESS GATES
    web1-6fbb48567f-2zfkm   0/1     ContainerCreating   0          2s    <none>   vms83.liruilongs.github.io   <none>           <none>
    web1-6fbb48567f-krj4j   0/1     ContainerCreating   0          2s    <none>   vms83.liruilongs.github.io   <none>           <none>
    web1-6fbb48567f-mzvtk   0/1     ContainerCreating   0          2s    <none>   vms82.liruilongs.github.io   <none>           <none>
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$

With the deployment web1 as the service provider, create a Service

Besides the kubectl expose command, a Service can also be defined in a configuration file and then created with kubectl create.

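The key fields in a Service definition are ports and selector. As an example, suppose the ports section sets the Service's virtual port to 8081 while the Pod's container port is 8080; because the two differ, targetPort must be used to specify the backend Pod's port. The selector section sets the labels that the backend Pods carry.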
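A minimal sketch of such a definition, using the 8081 and 8080 ports mentioned above. The Service name `webapp` and the label `app: webapp` are assumptions for illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: webapp             # hypothetical name
spec:
  selector:
    app: webapp            # assumed label carried by the backend Pods
  ports:
  - port: 8081             # virtual port exposed by the Service
    targetPort: 8080       # container port on the backend Pods
```

Saved to a file, a definition like this can be created with `kubectl create -f` followed by the file name.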

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl expose --name=svc1 deployment web1 --port=80
    service/svc1 exposed
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get svc -o wide
    NAME   TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE   SELECTOR
    svc1   ClusterIP   10.110.53.142   <none>        80/TCP    23s   app=web1
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods
    NAME                    READY   STATUS    RESTARTS   AGE
    web1-6fbb48567f-2zfkm   1/1     Running   0          14m
    web1-6fbb48567f-krj4j   1/1     Running   0          14m
    web1-6fbb48567f-mzvtk   1/1     Running   0          14m
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get ep -owide
    NAME   ENDPOINTS                                           AGE
    svc1   10.244.171.177:80,10.244.70.60:80,10.244.70.61:80   13m
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods --show-labels
    NAME                    READY   STATUS    RESTARTS   AGE   LABELS
    web1-6fbb48567f-2zfkm   1/1     Running   0          18m   app=web1,pod-template-hash=6fbb48567f
    web1-6fbb48567f-krj4j   1/1     Running   0          18m   app=web1,pod-template-hash=6fbb48567f
    web1-6fbb48567f-mzvtk   1/1     Running   0          18m   app=web1,pod-template-hash=6fbb48567f
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$

Creating a Service from Pods

Every Pod is assigned its own IP address, and every Pod exposes an independent Endpoint (Pod IP + ContainerPort) for clients to access. When several Pod replicas form a cluster to provide a service, how do clients reach them? The usual approach is to deploy a load balancer (software or hardware).

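In Kubernetes, the kube-proxy process running on every Node is in effect a smart software load balancer. It forwards requests for a Service to one of the backend Pod instances and implements load balancing and session affinity internally.

Resource file definition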

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$cat readiness-probe.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: pod-liveness
      name: pod-liveness
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: pod-liveness
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Create three Nginx Pods and expose them through one Service for testing.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$sed 's/pod-liveness/pod-liveness-1/' readiness-probe.yaml | kubectl apply -f -
    pod/pod-liveness-1 created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$sed 's/pod-liveness/pod-liveness-2/' readiness-probe.yaml | kubectl apply -f -
    pod/pod-liveness-2 created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods -o wide
    NAME             READY   STATUS    RESTARTS   AGE    IP             NODE                         NOMINATED NODE   READINESS GATES
    pod-liveness     1/1     Running   0          3m1s   10.244.70.50   vms83.liruilongs.github.io   <none>           <none>
    pod-liveness-1   1/1     Running   0          2m     10.244.70.51   vms83.liruilongs.github.io   <none>           <none>
    pod-liveness-2   1/1     Running   0          111s   10.244.70.52   vms83.liruilongs.github.io   <none>           <none>

Change the home page text

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$serve=pod-liveness
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl exec -it $serve -- sh -c "cat /usr/share/nginx/html/index.html"
    pod-liveness
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$serve=pod-liveness-1
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$serve=pod-liveness-2
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"

Change the labels

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods --show-labels
    NAME             READY   STATUS    RESTARTS   AGE   LABELS
    pod-liveness     1/1     Running   0          15m   run=pod-liveness
    pod-liveness-1   1/1     Running   0          14m   run=pod-liveness-1
    pod-liveness-2   1/1     Running   0          14m   run=pod-liveness-2
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl edit pods pod-liveness-1
    pod/pod-liveness-1 edited
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl edit pods pod-liveness-2
    pod/pod-liveness-2 edited
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods --show-labels
    NAME             READY   STATUS    RESTARTS   AGE   LABELS
    pod-liveness     1/1     Running   0          17m   run=pod-liveness
    pod-liveness-1   1/1     Running   0          16m   run=pod-liveness
    pod-liveness-2   1/1     Running   0          16m   run=pod-liveness

Create the Service from the Pod

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl expose --name=svc pod pod-liveness --port=80
    service/svc exposed
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get ep
    NAME   ENDPOINTS                                         AGE
    svc    10.244.70.50:80,10.244.70.51:80,10.244.70.52:80   16s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get svc
    NAME   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
    svc    ClusterIP   10.104.246.121   <none>        80/TCP    36s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
    └─$kubectl get pods -o wide
    NAME             READY   STATUS    RESTARTS   AGE   IP             NODE                         NOMINATED NODE   READINESS GATES
    pod-liveness     1/1     Running   0          24m   10.244.70.50   vms83.liruilongs.github.io   <none>           <none>
    pod-liveness-1   1/1     Running   0          23m   10.244.70.51   vms83.liruilongs.github.io   <none>           <none>
    pod-liveness-2   1/1     Running   0          23m   10.244.70.52   vms83.liruilongs.github.io   <none>           <none>

The Service works, and requests are balanced across the three Pods.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$while true; do curl 10.104.246.121 ; sleep 1
    > done
    pod-liveness
    pod-liveness-2
    pod-liveness
    pod-liveness-1
    pod-liveness-2
    ^C

Two load distribution strategies based on ClusterIP

Kubernetes currently provides two load distribution strategies: RoundRobin and SessionAffinity.

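| Load distribution strategy | Description |
| --- | --- |
| RoundRobin | Round-robin mode: requests are forwarded to the backend Pods in turn. |
| SessionAffinity | Session affinity based on the client IP address. |

By default Kubernetes distributes client requests in RoundRobin mode, but the SessionAffinity strategy can be enabled by setting service.spec.sessionAffinity=ClientIP.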
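As a rough sketch of the sessionAffinity setting mentioned above, the manifest below pins each client IP to one backend Pod. The Service name svc-affinity and the file name are hypothetical; the selector reuses the run=pod-liveness label from the test Pods, and 10800 seconds is the documented default timeout, written out only to make it visible.

```shell
#!/bin/sh
# Write a Service manifest that enables client-IP session affinity.
# "svc-affinity" is a placeholder name used only for this illustration.
cat > svc-affinity.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: svc-affinity
spec:
  selector:
    run: pod-liveness
  sessionAffinity: ClientIP      # default is None
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800      # how long a client stays pinned to one Pod
  ports:
  - port: 80
    targetPort: 80
EOF

# Next step on a real cluster (not run here):
#   kubectl apply -f svc-affinity.yaml
#   while true; do curl <CLUSTER-IP>; sleep 1; done   # should keep returning the same Pod
```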

Listing the Pods behind a Service

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get svc -owide | grep -v NAME | awk '{print $NF}' | xargs kubectl get pods -l
    NAME        READY   STATUS    RESTARTS   AGE
    pod-svc     1/1     Running   0          18m
    pod-svc-1   1/1     Running   0          17m
    pod-svc-2   1/1     Running   0          16m

Port forwarding and multi-port settings

A containerized application may serve on several ports, so a Service definition can likewise forward several ports to several application services.

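A Kubernetes Service supports multiple Endpoints (ports). When there is more than one, each Endpoint must be given a name to tell them apart. Below is a sample multi-port Service definition for Tomcat: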

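    - port: 8080
      targetPort: 80
      name: web1
    - port: 8008
      targetPort: 90
      name: web2

Why does every port of a multi-port Service need a name? This comes down to the Kubernetes service discovery mechanism (service publishing implemented through DNS).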
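For context, the ports fragment above could sit inside a complete manifest like the following sketch. The Service name multi-port-svc and the file name are hypothetical; the selector borrows the run=pod-svc label used elsewhere in this article.

```shell
#!/bin/sh
# Write a multi-port Service; every entry under "ports" carries a unique name.
# "multi-port-svc" is a placeholder name used only for this illustration.
cat > multi-port-svc.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: multi-port-svc
spec:
  selector:
    run: pod-svc
  ports:
  - name: web1
    port: 8080
    targetPort: 80
  - name: web2
    port: 8008
    targetPort: 90
EOF

# Next step on a real cluster (not run here):
#   kubectl apply -f multi-port-svc.yaml
```

The API server rejects a Service with more than one port if any of them is unnamed, so the names are not optional here.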

From the command line

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl expose --name=svc pod pod-svc --port=808 --target-port=80 --selector=run=pod-svc
    service/svc exposed
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get svc -owide
    NAME   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE   SELECTOR
    svc    ClusterIP   10.102.223.233   <none>        808/TCP   4s    run=pod-svc

How the Service IP can be reached depends on the kube-proxy routing mode.

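- iptables: the Service (CLUSTER-IP) address cannot be pinged
- ipvs: the Service (CLUSTER-IP) address can be pinged

Service discovery

Service discovery is the question of how, from inside a Pod (that is, inside a container), we obtain the IP and port of the service we want to reach. It is similar to the registry concept in microservices.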

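| Kubernetes service discovery mechanism | Scope |
| --- | --- |
| Originally Kubernetes solved this with Linux environment variables: each Service generates a set of corresponding environment variables (ENV), which are injected automatically into the containers of every Pod at startup. | Isolated per namespace |
| Later Kubernetes introduced a DNS system as an add-on and used the Service name as the DNS name, so programs can open connections using the Service name directly. Most applications on Kubernetes now use DNS for service discovery. | Visible across namespaces |

Environment preparation: we reuse the Service built on the Pods from earlier.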

    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$kubectl get pods -owide
    NAME        READY   STATUS    RESTARTS   AGE   IP               NODE                         NOMINATED NODE   READINESS GATES
    pod-svc     1/1     Running   0          69m   10.244.70.35     vms83.liruilongs.github.io   <none>           <none>
    pod-svc-1   1/1     Running   0          68m   10.244.70.39     vms83.liruilongs.github.io   <none>           <none>
    pod-svc-2   1/1     Running   0          68m   10.244.171.153   vms82.liruilongs.github.io   <none>           <none>
    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$s=pod-svc
    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$kubectl exec -it $s -- sh -c "echo $s > /usr/share/nginx/html/index.html"
    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$s=pod-svc-1
    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$kubectl exec -it $s -- sh -c "echo $s > /usr/share/nginx/html/index.html"
    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$s=pod-svc-2
    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$kubectl exec -it $s -- sh -c "echo $s > /usr/share/nginx/html/index.html"
    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$kubectl get svc -owide
    NAME   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE   SELECTOR
    svc    ClusterIP   10.102.223.233   <none>        808/TCP   46m   run=pod-svc
    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$while true ;do curl 10.102.223.233:808;sleep 2 ; done
    pod-svc-2
    pod-svc-1
    pod-svc
    pod-svc
    pod-svc
    pod-svc-2
    ^C

Preparing the test image

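    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible node -m shell -a "docker pull yauritux/busybox-curl"

Discovery through Linux environment variables: isolated per namespace

Every newly created Pod receives variables describing the Services that already exist. These variables are isolated per namespace; Pods in other namespaces do not get them.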

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl run testpod -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent -n default
    If you don't see a command prompt, try pressing enter.
    /home # env | grep ^SVC
    /home #

The variables exist only in the Service's own namespace: a Pod can read only the variables of Services in the same namespace.

In other words, within the same namespace a container can use these variables to find and use a Service that already exists.
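The variable names follow a fixed pattern: the Service name is upper-cased and dashes become underscores, then suffixes such as _SERVICE_HOST and _SERVICE_PORT are appended. The helper below is a small sketch of that naming rule; the function name svc_env_prefix is made up for this example.

```shell
#!/bin/sh
# Derive the environment-variable prefix Kubernetes uses for a Service name:
# upper-case the name and turn dashes into underscores.
# svc_env_prefix is a hypothetical helper, not part of kubectl.
svc_env_prefix() {
  printf '%s' "$1" | tr 'a-z-' 'A-Z_'
}

# Print the host/port variable names for a few Service names from this article.
for name in svc dbsvc pod-svc-svc; do
  prefix=$(svc_env_prefix "$name")
  echo "${prefix}_SERVICE_HOST ${prefix}_SERVICE_PORT"
done
```

Keep in mind that the variables are injected only when a container starts, so a Pod sees only the Services that existed before it was created.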

    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$kubectl run testpod -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent
    If you don't see a command prompt, try pressing enter.
    /home # env | grep ^SVC
    SVC_PORT_808_TCP_ADDR=10.102.223.233
    SVC_PORT_808_TCP_PORT=808
    SVC_PORT_808_TCP_PROTO=tcp
    SVC_SERVICE_HOST=10.102.223.233
    SVC_PORT_808_TCP=tcp://10.102.223.233:808
    SVC_SERVICE_PORT=808
    SVC_PORT=tcp://10.102.223.233:808
    /home #
    /home # while true ;do curl $SVC_SERVICE_HOST:$SVC_PORT_808_TCP_PORT ;sleep 2 ; done
    pod-svc-2
    pod-svc-2
    pod-svc
    pod-svc
    ^C
    /home #

Discovery through DNS: visible across namespaces

Kubernetes came up with an ingenious and far-reaching design:

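Services do not share the IP address of a single load balancer. Instead, each Service is assigned a globally unique virtual IP address, called the Cluster IP. Every service thus becomes a "communication node" with its own IP address, and calling a service becomes a plain TCP networking problem.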

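As soon as a Service is created, Kubernetes automatically assigns it an available Cluster IP, and that Cluster IP does not change for the whole lifetime of the Service. The thorny problem of service discovery is therefore easy to solve in the Kubernetes architecture: it is enough to map the Service's name to its Cluster IP address in DNS.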
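The DNS name that Kubernetes maps to the Cluster IP has the form service.namespace.svc.cluster-domain. The sketch below builds that name; the function svc_fqdn is a made-up helper, and cluster.local is assumed as the cluster domain because it is the common default.

```shell
#!/bin/sh
# Build the fully qualified DNS name of a Service.
# $1 = Service name, $2 = namespace, $3 = cluster domain (assumed default: cluster.local)
# svc_fqdn is a hypothetical helper for illustration only.
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

# Name of the "svc" Service in the namespace used throughout this article.
svc_fqdn svc liruilong-svc-create

# Inside a Pod, the name can then be used directly (not run here):
#   curl "$(svc_fqdn svc liruilong-svc-create):808"
```

Shorter forms such as svc or svc.liruilong-svc-create also resolve from inside a Pod, because the search list in the Pod's /etc/resolv.conf appends the remaining suffixes.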

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl get svc -n kube-system -owide
    NAME             TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                  AGE     SELECTOR
    kube-dns         ClusterIP   10.96.0.10       <none>        53/UDP,53/TCP,9153/TCP   6d20h   k8s-app=kube-dns
    metrics-server   ClusterIP   10.111.104.173   <none>        443/TCP                  6d18h   k8s-app=metrics-server
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl get pods -n kube-system -l k8s-app=kube-dns
    NAME                       READY   STATUS    RESTARTS      AGE
    coredns-7f6cbbb7b8-ncd2s   1/1     Running   2 (23h ago)   3d22h
    coredns-7f6cbbb7b8-pjnct   1/1     Running   2 (23h ago)   3d22h

With this DNS service in place, every Service that is created automatically gets a DNS record.

    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$kubectl run testpod -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent
    If you don't see a command prompt, try pressing enter.
    /home # cat /etc/resolv.conf
    nameserver 10.96.0.10
    search liruilong-svc-create.svc.cluster.local svc.cluster.local cluster.local localdomain 168.26.131
    options ndots:5
    /home #

The DNS service in kube-system automatically discovers the ClusterIP of the Services in every namespace. Within one namespace, a service can therefore reach another service simply by its Service name. Any Service, whichever namespace it is created in, is registered automatically with the DNS in kube-system. From a different namespace, the Service is reached as service-name.namespace-name.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl config view | grep namesp
    namespace: liruilong-svc-create

Now create a Pod in another namespace and use it to access the service provided in the current namespace.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl run testpod -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent -n default
    If you don't see a command prompt, try pressing enter.
    /home # curl svc.liruilong-svc-create:808
    pod-svc-2
    /home #

Access through the ClusterIP

This is a comparatively simple approach: access the service directly through its ClusterIP. It also works across namespaces. Below, the test Pod runs in a different namespace.

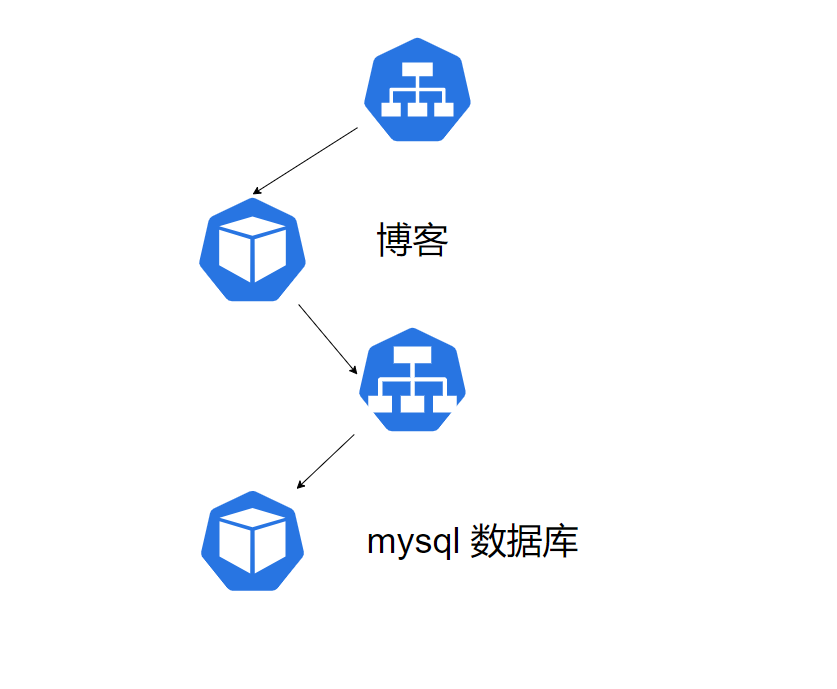

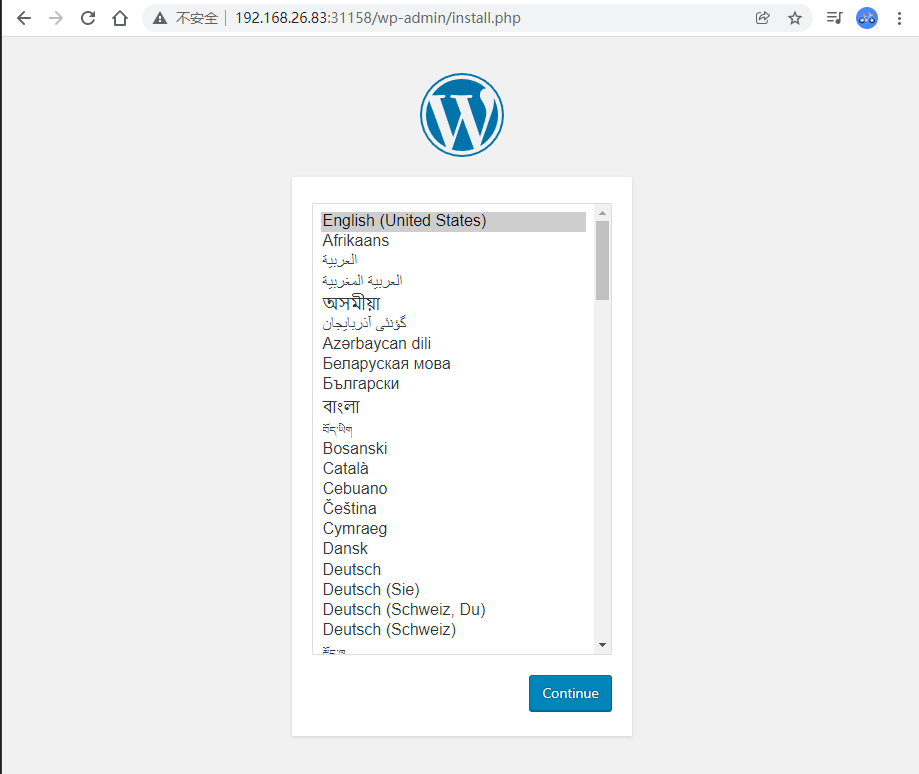

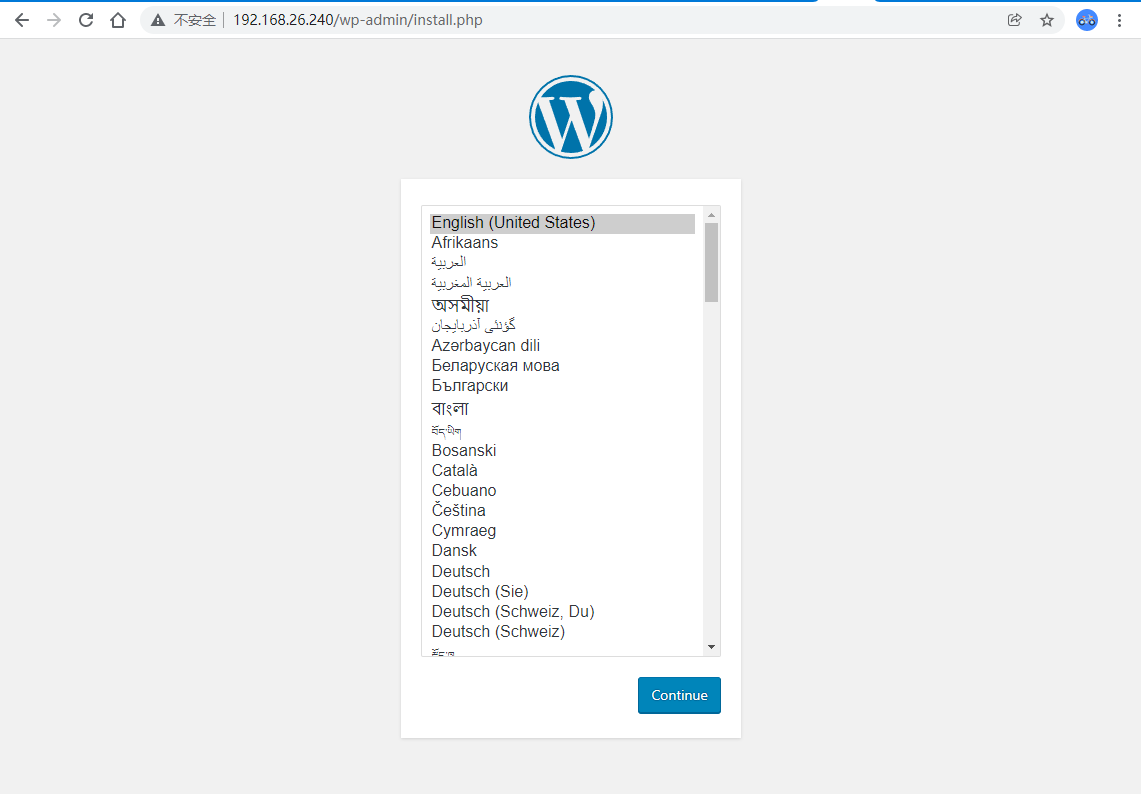

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl run testpod -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent -n default
    If you don't see a command prompt, try pressing enter.
    /home # while true ;do curl 10.102.223.233:808;sleep 2 ; done
    pod-svc
    pod-svc-1
    pod-svc
    pod-svc-2
    pod-svc
    ^C
    /home #

Hands-on: building a WordPress blog

Environment preparation: pull the images first if they are not present.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible node -m shell -a "docker images | grep mysql"
    192.168.26.82 | CHANGED | rc=0 >>
    mysql                         latest   ecac195d15af   2 months ago   516MB
    mysql                         <none>   9da615fced53   2 months ago   514MB
    hub.c.163.com/library/mysql   latest   9e64176cd8a2   4 years ago    407MB
    192.168.26.83 | CHANGED | rc=0 >>
    mysql                         latest   ecac195d15af   2 months ago   516MB
    mysql                         <none>   9da615fced53   2 months ago   514MB
    hub.c.163.com/library/mysql   latest   9e64176cd8a2   4 years ago    407MB
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible node -m shell -a "docker images | grep wordpress"
    192.168.26.82 | CHANGED | rc=0 >>
    hub.c.163.com/library/wordpress   latest   dccaeccfba36   4 years ago   406MB
    192.168.26.83 | CHANGED | rc=0 >>
    hub.c.163.com/library/wordpress   latest   dccaeccfba36   4 years ago   406MB

Create a MySQL database Pod

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$cat db-pod-mysql.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: dbpod
      name: dbpod
    spec:
      containers:
      - image: hub.c.163.com/library/mysql
        imagePullPolicy: IfNotPresent
        name: dbpod
        resources: {}
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: liruilong
        - name: MYSQL_USER
          value: root
        - name: MYSQL_DATABASE
          value: blog
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl apply -f db-pod-mysql.yaml
    pod/dbpod created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods
    NAME    READY   STATUS    RESTARTS   AGE
    dbpod   1/1     Running   0          5s

Create a Service that connects to the MySQL Pod. This can also be seen as publishing the MySQL service; it uses the ClusterIP type by default.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods --show-labels
    NAME    READY   STATUS    RESTARTS   AGE   LABELS
    dbpod   1/1     Running   0          80s   run=dbpod
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl expose --name=dbsvc pod dbpod --port=3306
    service/dbsvc exposed
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get svc dbsvc -o yaml
    apiVersion: v1
    kind: Service
    metadata:
      creationTimestamp: "2021-12-21T15:31:19Z"
      labels:
        run: dbpod
      name: dbsvc
      namespace: liruilong-svc-create
      resourceVersion: "310763"
      uid: 05ccb22d-19c4-443a-ba86-f17d63159144
    spec:
      clusterIP: 10.102.137.59
      clusterIPs:
      - 10.102.137.59
      internalTrafficPolicy: Cluster
      ipFamilies:
      - IPv4
      ipFamilyPolicy: SingleStack
      ports:
      - port: 3306
        protocol: TCP
        targetPort: 3306
      selector:
        run: dbpod
      sessionAffinity: None
      type: ClusterIP
    status:
      loadBalancer: {}

Create a WordPress blog Pod

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get svc
    NAME    TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    dbsvc   ClusterIP   10.102.137.59   <none>        3306/TCP   3m12s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$cat blog-pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: blog
      name: blog
    spec:
      containers:
      - image: hub.c.163.com/library/wordpress
        imagePullPolicy: IfNotPresent
        name: blog
        resources: {}
        env:
        - name: WORDPRESS_DB_USER
          value: root
        - name: WORDPRESS_DB_PASSWORD
          value: liruilong
        - name: WORDPRESS_DB_NAME
          value: blog
        - name: WORDPRESS_DB_HOST
          #value: $(MYSQL_SERVICE_HOST)
          value: 10.102.137.59
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Create a Service that publishes the blog

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl expose --name=blogsvc pod blog --port=80 --type=NodePort
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get svc blogsvc -o yaml
    apiVersion: v1
    kind: Service
    metadata:
      creationTimestamp: "2021-12-20T17:11:03Z"
      labels:
        run: blog
      name: blogsvc
      namespace: liruilong-svc-create
      resourceVersion: "294057"
      uid: 4d350715-0210-441d-9c55-af0f31b7a090
    spec:
      clusterIP: 10.110.28.191
      clusterIPs:
      - 10.110.28.191
      externalTrafficPolicy: Cluster
      internalTrafficPolicy: Cluster
      ipFamilies:
      - IPv4
      ipFamilyPolicy: SingleStack
      ports:
      - nodePort: 31158
        port: 80
        protocol: TCP
        targetPort: 80
      selector:
        run: blog
      sessionAffinity: None
      type: NodePort
    status:
      loadBalancer: {}

Check the service status and test

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get svc -o wide
    NAME      TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE   SELECTOR
    blogsvc   NodePort    10.110.28.191   <none>        80:31158/TCP   22h   run=blog
    dbsvc     ClusterIP   10.102.137.59   <none>        3306/TCP       15m   run=dbpod
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods -o wide
    NAME    READY   STATUS    RESTARTS   AGE   IP               NODE                         NOMINATED NODE   READINESS GATES
    blog    1/1     Running   0          14m   10.244.171.159   vms82.liruilongs.github.io   <none>           <none>
    dbpod   1/1     Running   0          21m   10.244.171.163   vms82.liruilongs.github.io   <none>           <none>

Access

Both Pods are in the same namespace here, so the blog Pod can read the IP of the Service published for the database from environment variables.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl exec -it blog -- bash
    root@blog:/var/www/html# env | grep DBSVC
    DBSVC_PORT_3306_TCP_ADDR=10.102.137.59
    DBSVC_SERVICE_PORT=3306
    DBSVC_PORT_3306_TCP_PORT=3306
    DBSVC_PORT_3306_TCP=tcp://10.102.137.59:3306
    DBSVC_SERVICE_HOST=10.102.137.59
    DBSVC_PORT=tcp://10.102.137.59:3306
    DBSVC_PORT_3306_TCP_PROTO=tcp
    root@blog:/var/www/html#

So the blog Pod can also be configured like this:

    env:
    - name: WORDPRESS_DB_USER
      value: root
    - name: WORDPRESS_DB_PASSWORD
      value: liruilong
    - name: WORDPRESS_DB_NAME
      value: blog
    - name: WORDPRESS_DB_HOST
      value: $(DBSVC_SERVICE_HOST)
      #value: 10.102.137.59

Or like this, using the DNS name:

    env:
    - name: WORDPRESS_DB_USER
      value: root
    - name: WORDPRESS_DB_PASSWORD
      value: liruilong
    - name: WORDPRESS_DB_NAME
      value: blog
    - name: WORDPRESS_DB_HOST
      value: dbsvc.liruilong-svc-create
      #value: 10.102.137.59

Publishing services

Publishing means making a service reachable from hosts outside the cluster.

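The "three kinds of IP" in Kubernetes

Node IP: the IP address of a Node. It is the address of the physical network interface of each node in the Kubernetes cluster. This is a real physical network, and all servers on it can talk to each other directly, whether or not some of them belong to the Kubernetes cluster. It also means that a machine outside the cluster must go through a Node IP to reach a node or a TCP/IP service inside the cluster.

Pod IP: the IP address of a Pod. It is allocated by Docker Engine from the address range of the docker0 bridge and usually belongs to a virtual layer-2 network. As mentioned earlier, Kubernetes requires Pods on different Nodes to be able to communicate directly, so a container in one Pod reaches a container in another Pod over the virtual layer-2 network of the Pod IPs, while the real TCP/IP traffic leaves through the physical interface that carries the Node IP.

Cluster IP: the IP address of a Service. It applies only to the Kubernetes Service object and is managed and allocated by Kubernetes (from the Cluster IP address pool). A Cluster IP cannot be pinged, because no "physical network object" exists to answer. It is usable only in combination with a Service port; on its own it provides no basis for TCP/IP communication. Cluster IPs belong to the closed space of the Kubernetes cluster, so a node outside the cluster needs some extra work to reach such a port.

Inside the cluster, traffic between the Node IP network, the Pod IP network and the Cluster IP network follows special, programmatically generated routing rules designed by Kubernetes, which differ considerably from the IP routing we are used to. For external systems that need to access a Service, NodePort is the most direct, effective and common solution. It only requires the following extension to the Service definition: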

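    ...
    spec:
      type: NodePort
      ports:
      - port: 8080
        nodePort: 31002
      selector:
        tier: frontend
    ...

The Service can then be reached through nodePort 31002. NodePort works by opening a corresponding TCP listening port on every Node in the cluster for each Service that needs external access. An external system can use the IP address of any Node plus the NodePort to reach the service, and running netstat on any Node shows the NodePort being listened on.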
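The NodePort fragment above could be completed into a full manifest as in the sketch below. The Service name frontend-svc, the file name and the helper valid_node_port are hypothetical; the check assumes the default NodePort range of 30000-32767, which a cluster administrator can change on the API server.

```shell
#!/bin/sh
# Check that a port falls inside the default NodePort range (30000-32767).
# valid_node_port is a hypothetical helper; clusters may configure another range.
valid_node_port() {
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

NODE_PORT=31002
if ! valid_node_port "$NODE_PORT"; then
  echo "nodePort $NODE_PORT is outside 30000-32767" >&2
  exit 1
fi

# Write the NodePort Service; "frontend-svc" is a placeholder name.
cat > frontend-nodeport.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: frontend-svc
spec:
  type: NodePort
  selector:
    tier: frontend
  ports:
  - port: 8080
    nodePort: $NODE_PORT
EOF

# Next step on a real cluster (not run here):
#   kubectl apply -f frontend-nodeport.yaml
#   curl http://<any-node-ip>:$NODE_PORT/
```

If nodePort is left out, Kubernetes picks a free port from the range automatically, which is what happened with port 31158 in the blogsvc example.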

Let us now look at concrete examples.

NodePort

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl expose --name=blogsvc pod blog --port=80 --type=NodePort
    apiVersion: v1
    kind: Service
    metadata:
      creationTimestamp: "2021-12-20T17:11:03Z"
      labels:
        run: blog
      name: blogsvc
      namespace: liruilong-svc-create
      resourceVersion: "294057"
      uid: 4d350715-0210-441d-9c55-af0f31b7a090
    spec:
      clusterIP: 10.110.28.191
      clusterIPs:
      - 10.110.28.191
      externalTrafficPolicy: Cluster
      internalTrafficPolicy: Cluster
      ipFamilies:
      - IPv4
      ipFamilyPolicy: SingleStack
      ports:
      - nodePort: 31158
        port: 80
        protocol: TCP
        targetPort: 80
      selector:
        run: blog
      sessionAffinity: None
      type: NodePort
    status:
      loadBalancer: {}

The blog Service created earlier is exposed exactly this way. NodePort maps the port on all worker nodes, so the service is reachable through any node: external clients access it as node IP + 31158. The drawback is that ports become hard to maintain once there are many services.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get svc
    NAME      TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    blogsvc   NodePort    10.110.28.191   <none>        80:31158/TCP   23h
    dbsvc     ClusterIP   10.102.137.59   <none>        3306/TCP       49m

hostPort

hostPort maps a container port onto the host, but only on the node where the Pod is running. It is generally not recommended, although I think it is acceptable for static nodes.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$cat pod-svc.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: pod-svc
      name: pod-svc
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: pod-svc
        ports:
        - containerPort: 80
          hostPort: 800
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods -o wide
    NAME      READY   STATUS    RESTARTS   AGE   IP               NODE                         NOMINATED NODE   READINESS GATES
    pod-svc   1/1     Running   0          69s   10.244.171.172   vms82.liruilongs.github.io   <none>           <none>

To change a Service's type back to ClusterIP: from 1.20 on the type can be changed directly, while earlier versions require deleting the nodePort first.

LoadBalancer

The Service load balancing problem

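NodePort does not solve every problem of external access to a Service. Load balancing is one example: if the cluster has 10 Nodes, it is best to put a load balancer in front of them, so that external requests only need to reach the load balancer's IP address and the load balancer forwards the traffic to the NodePort on one of the Nodes behind it.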

NodePort load balancing

The load balancer component sits outside the Kubernetes cluster. It is usually a hardware load balancer or a software one such as HAProxy or Nginx. For each Service, we normally have to configure a matching load balancer instance that forwards traffic to the backend Nodes.

Kubernetes offers an automated solution. If the cluster runs on Google's GCE public cloud, changing the Service from type=NodePort to type=LoadBalancer is enough: Kubernetes then creates a matching load balancer instance automatically and returns its IP address for external clients to use. Of course, the same can also be achieved with add-ons such as MetalLB.

A LoadBalancer Service needs an address pool maintained outside the Service, from which an IP is then assigned to the Service.

If we create a LoadBalancer Service directly, it stays in the pending state indefinitely, because there is no cloud load balancer to back it.
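For reference, a LoadBalancer Service for the blog Pod might be declared as in the sketch below instead of using kubectl expose. The file name is hypothetical; the Service name blogsvc and the run=blog selector follow the names used in this article.

```shell
#!/bin/sh
# Write a LoadBalancer Service for the blog Pod (selector run=blog).
cat > blogsvc-lb.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: blogsvc
spec:
  type: LoadBalancer
  selector:
    run: blog
  ports:
  - port: 80
    targetPort: 80
EOF

# Next step on a real cluster (not run here):
#   kubectl apply -f blogsvc-lb.yaml
#   kubectl get svc blogsvc   # EXTERNAL-IP shows <pending> until an address is allocated
```

A LoadBalancer Service still gets a ClusterIP and a NodePort, so it remains reachable through those while the external address is pending.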

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl expose --name=blogsvc pod blog --port=80 --type=LoadBalancer
    service/blogsvc exposed
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get svc -o wide | grep blogsvc
    blogsvc   LoadBalancer   10.106.28.175   <pending>   80:32745/TCP   26s   run=blog

MetalLB provides support for LoadBalancer-type Services in a Kubernetes-native way.

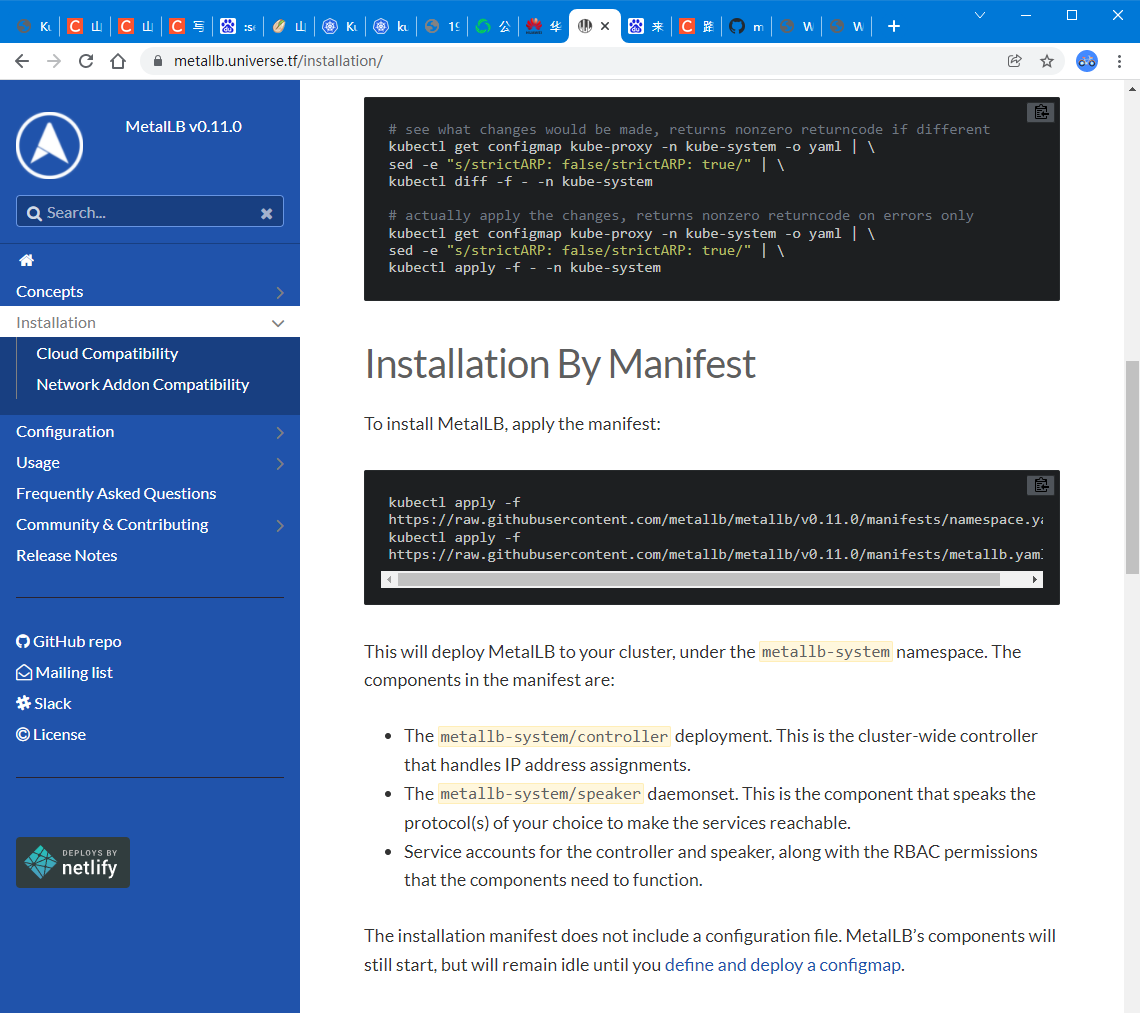

Using MetalLB: https://metallb.universe.tf/

Manifest: https://github.com/metallb/metallb/blob/main/manifests/metallb.yaml

Create the namespace

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl create ns metallb-system
    namespace/metallb-system created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl config set-context $(kubectl config current-context) --namespace=metallb-system
    Context "kubernetes-admin@kubernetes" modified.

When pasting the manifest into vim, :set paste avoids garbled indentation.

Create MetalLB

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create/metalld]
    └─$kubectl apply -f metallb.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create/metalld]
    └─$kubectl get pods -o wide
    NAME                         READY   STATUS    RESTARTS   AGE     IP               NODE                         NOMINATED NODE   READINESS GATES
    controller-66d9554cc-8rxq8   1/1     Running   0          3m36s   10.244.171.170   vms82.liruilongs.github.io   <none>           <none>
    speaker-bbl94                1/1     Running   0          3m36s   192.168.26.83    vms83.liruilongs.github.io   <none>           <none>
    speaker-ckbzj                1/1     Running   0          3m36s   192.168.26.81    vms81.liruilongs.github.io   <none>           <none>
    speaker-djmpr                1/1     Running   0          3m36s   192.168.26.82    vms82.liruilongs.github.io   <none>           <none>

Create the address pool

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create/metalld]
    └─$vim pool.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create/metalld]
    └─$kubectl apply -f pool.yaml
    configmap/config created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create/metalld]
    └─$cat pool.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: metallb-system
      name: config
    data:
      config: |
        address-pools:
        - name: default
          protocol: layer2
          addresses:
          - 192.168.26.240-192.168.26.250

With type=LoadBalancer, MetalLB assigns the address 192.168.26.240 to blogsvc.

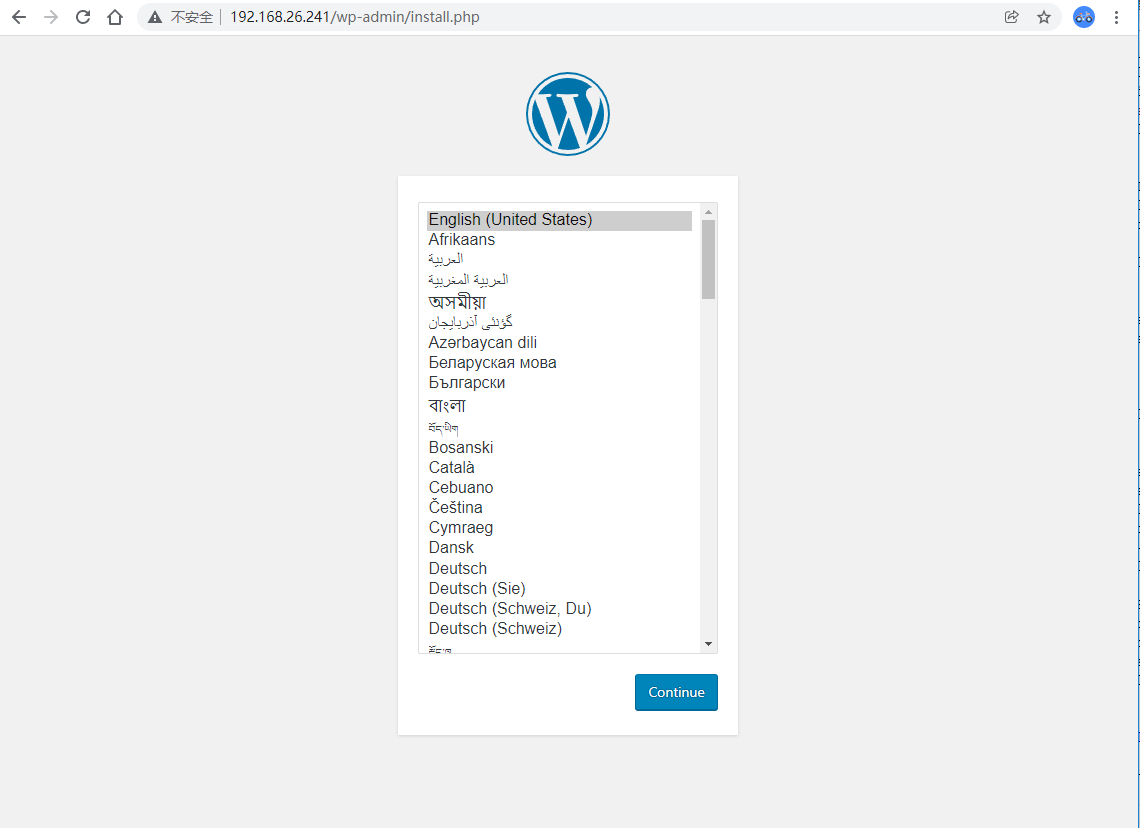

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create/metalld]
    └─$kubectl get svc
    No resources found in metallb-system namespace.
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create/metalld]
    └─$kubectl config set-context $(kubectl config current-context) --namespace=liruilong-svc-create
    Context "kubernetes-admin@kubernetes" modified.
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create/metalld]
    └─$kubectl get svc
    NAME    TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    dbsvc   ClusterIP   10.102.137.59   <none>        3306/TCP   101m
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create/metalld]
    └─$kubectl expose --name=blogsvc pod blog --port=80 --type=LoadBalancer
    service/blogsvc exposed
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create/metalld]
    └─$kubectl get svc -o wide
    NAME      TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)        AGE    SELECTOR
    blogsvc   LoadBalancer   10.108.117.197   192.168.26.240   80:30230/TCP   9s     run=blog
    dbsvc     ClusterIP      10.102.137.59    <none>           3306/TCP       101m   run=dbpod

The blog can now be reached directly at 192.168.26.240.

A second LoadBalancer Service created the same way is reachable as well.

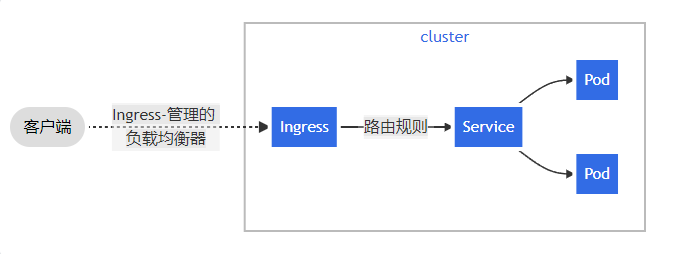

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create/metalld]
    └─$kubectl expose --name=blogsvc-1 pod blog --port=80 --type=LoadBalancer
    service/blogsvc-1 exposed
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create/metalld]
    └─$kubectl get svc -o wide
    NAME        TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)        AGE    SELECTOR
    blogsvc     LoadBalancer   10.108.117.197   192.168.26.240   80:30230/TCP   11m    run=blog
    blogsvc-1   LoadBalancer   10.110.58.143    192.168.26.241   80:31827/TCP   3s     run=blog
    dbsvc       ClusterIP      10.102.137.59    <none>           3306/TCP       113m   run=dbpod

The new address is reachable too.

Ingress (recommended)

Ingress is an API object that manages external access to the services in a cluster, typically over HTTP. Ingress can provide load balancing, SSL termination and name-based virtual hosting. It exposes HTTP and HTTPS routes from outside the cluster to Services inside it, and traffic routing is controlled by the rules defined on the Ingress resource. My own understanding is that it works like an Nginx: it distributes traffic according to routing rules.

Ingress rules are configured in a namespace and loaded into the controller, which acts as an Nginx reverse proxy (ingress-nginx-controller). An Ingress can be configured to give Services externally reachable URLs, load-balance traffic, terminate SSL/TLS and offer name-based virtual hosting. The Ingress controller is usually responsible for fulfilling the Ingress through a load balancer, although it may also configure an edge router or other front ends to help handle the traffic. Ingress does not expose arbitrary ports or protocols. To expose services other than HTTP and HTTPS to the Internet, a Service of type Service.Type=NodePort or Service.Type=LoadBalancer is normally used.

Deploying ingress-nginx-controller

Required images

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$grep image nginx-controller.yaml
    image: docker.io/liangjw/ingress-nginx-controller:v1.0.1
    imagePullPolicy: IfNotPresent
    image: docker.io/liangjw/kube-webhook-certgen:v1.1.1
    imagePullPolicy: IfNotPresent
    image: docker.io/liangjw/kube-webhook-certgen:v1.1.1
    imagePullPolicy: IfNotPresent

Preparation: upload and load the images

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible node -m copy -a "dest=/root/ src=./../ingress-nginx-controller-img.tar"
    192.168.26.82 | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "a3c2f87fd640c0bfecebeab24369c7ca8d6f0fa0",
        "dest": "/root/ingress-nginx-controller-img.tar",
        "gid": 0,
        "group": "root",
        "md5sum": "d5bf7924cb3c61104f7a07189a2e6ebd",
        "mode": "0644",
        "owner": "root",
        "size": 334879744,
        "src": "/root/.ansible/tmp/ansible-tmp-1640207772.53-9140-99388332454846/source",
        "state": "file",
        "uid": 0
    }
    192.168.26.83 | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "a3c2f87fd640c0bfecebeab24369c7ca8d6f0fa0",
        "dest": "/root/ingress-nginx-controller-img.tar",
        "gid": 0,
        "group": "root",
        "md5sum": "d5bf7924cb3c61104f7a07189a2e6ebd",
        "mode": "0644",
        "owner": "root",
        "size": 334879744,
        "src": "/root/.ansible/tmp/ansible-tmp-1640207772.55-9142-78097462005167/source",
        "state": "file",
        "uid": 0
    }
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible node -m shell -a "docker load -i /root/ingress-nginx-controller-img.tar"

Create the Ingress controller ingress-nginx-controller

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl apply -f nginx-controller.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods -n ingress-nginx -o wide
    NAME                                        READY   STATUS      RESTARTS   AGE   IP               NODE                         NOMINATED NODE   READINESS GATES
    ingress-nginx-admission-create--1-hvvxd     0/1     Completed   0          89s   10.244.171.171   vms82.liruilongs.github.io   <none>           <none>
    ingress-nginx-admission-patch--1-g4ffs      0/1     Completed   0          89s   10.244.70.7      vms83.liruilongs.github.io   <none>           <none>
    ingress-nginx-controller-744d4fc6b7-7fcfj   1/1     Running     0          90s   192.168.26.83    vms83.liruilongs.github.io   <none>           <none>

Configure DNS: map the domain names to the service

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible 192.168.26.83 -m shell -a "echo -e '192.168.26.83 liruilongs.nginx1\n192.168.26.83 liruilongs.nginx2\n192.168.26.83 liruilongs.nginx3' >> /etc/hosts"
    192.168.26.83 | CHANGED | rc=0 >>
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible 192.168.26.83 -m shell -a "cat /etc/hosts"
    192.168.26.83 | CHANGED | rc=0 >>
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.26.81 vms81.liruilongs.github.io vms81
    192.168.26.82 vms82.liruilongs.github.io vms82
    192.168.26.83 vms83.liruilongs.github.io vms83
    192.168.26.83 liruilongs.nginx1
    192.168.26.83 liruilongs.nginx2
    192.168.26.83 liruilongs.nginx3

Simulate the services: create three Pods to serve requests

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$cat pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: pod-svc
      name: pod-svc
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: pod-svc
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl apply -f pod.yaml
    pod/pod-svc created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$sed 's/pod-svc/pod-svc-1/' pod.yaml > pod-1.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$sed 's/pod-svc/pod-svc-2/' pod.yaml > pod-2.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl apply -f pod-1.yaml
    pod/pod-svc-1 created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl apply -f pod-2.yaml
    pod/pod-svc-2 created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods -o wide
    NAME        READY   STATUS    RESTARTS   AGE     IP               NODE                         NOMINATED NODE   READINESS GATES
    pod-svc     1/1     Running   0          2m42s   10.244.171.174   vms82.liruilongs.github.io   <none>           <none>
    pod-svc-1   1/1     Running   0          80s     10.244.171.175   vms82.liruilongs.github.io   <none>           <none>
    pod-svc-2   1/1     Running   0          70s     10.244.171.176   vms82.liruilongs.github.io   <none>           <none>

Change the Nginx home pages and create one Service per Pod

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get pods --show-labels
    NAME        READY   STATUS    RESTARTS   AGE    LABELS
    pod-svc     1/1     Running   0          3m7s   run=pod-svc
    pod-svc-1   1/1     Running   0          105s   run=pod-svc-1
    pod-svc-2   1/1     Running   0          95s    run=pod-svc-2
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$serve=pod-svc
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl expose --name=$serve-svc pod $serve --port=80
    service/pod-svc-svc exposed
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$serve=pod-svc-1
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl expose --name=$serve-svc pod $serve --port=80
    service/pod-svc-1-svc exposed
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$serve=pod-svc-2
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl expose --name=$serve-svc pod $serve --port=80
    service/pod-svc-2-svc exposed

Three Services now exist to simulate the load.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get svc -o wide
    NAME            TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE    SELECTOR
    pod-svc-1-svc   ClusterIP   10.99.80.121   <none>        80/TCP    94s    run=pod-svc-1
    pod-svc-2-svc   ClusterIP   10.110.40.30   <none>        80/TCP    107s   run=pod-svc-2
    pod-svc-svc     ClusterIP   10.96.152.5    <none>        80/TCP    85s    run=pod-svc
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get ing
    No resources found in liruilong-svc-create namespace.
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$vim ingress.yaml

Create the Ingress. This is only a simple test; more complex routing rules can be configured to match the actual business needs.

ingress.yaml

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: my-ingress
      annotations:
        kubernetes.io/ingress.class: "nginx" # required
    spec:
      rules:
      - host: liruilongs.nginx1
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: pod-svc-svc
                port:
                  number: 80
      - host: liruilongs.nginx2
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: pod-svc-1-svc
                port:
                  number: 80
      - host: liruilongs.nginx3
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: pod-svc-2-svc
                port:
                  number: 80

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl apply -f ingress.yaml
    ingress.networking.k8s.io/my-ingress created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$kubectl get ing
    NAME         CLASS    HOSTS                                                   ADDRESS   PORTS   AGE
    my-ingress   <none>   liruilongs.nginx1,liruilongs.nginx2,liruilongs.nginx3             80      17s

Load test

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible 192.168.26.83 -m shell -a "curl liruilongs.nginx1"
    192.168.26.83 | CHANGED | rc=0 >>
    pod-svc
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible 192.168.26.83 -m shell -a "curl liruilongs.nginx2"
    192.168.26.83 | CHANGED | rc=0 >>
    pod-svc-1

The domain names resolve to the address of the controller. The controller here uses the host's network (hostNetwork), so its ports are bound directly on the node.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-svc-create]
    └─$grep -i hostN nginx-controller.yaml
    hostNetwork: true
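As a possible variation on the Ingress used in this test, the controller can be selected through spec.ingressClassName rather than through the kubernetes.io/ingress.class annotation, which is deprecated in newer Kubernetes versions. The sketch below assumes that an IngressClass named nginx exists in the cluster; the file name is hypothetical and only the first host rule is shown.

```shell
#!/bin/sh
# Write an Ingress that picks its controller via spec.ingressClassName.
# Assumes an IngressClass called "nginx" is installed; adjust if yours differs.
cat > ingress-classname.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: liruilongs.nginx1
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: pod-svc-svc
            port:
              number: 80
EOF

# Next steps on a real cluster (not run here):
#   kubectl get ingressclass
#   kubectl apply -f ingress-classname.yaml
#   curl -H 'Host: liruilongs.nginx1' http://192.168.26.83/   # test without editing /etc/hosts
```

Passing the Host header explicitly exercises the same name-based routing as the /etc/hosts entries, since the controller matches rules on the requested host name.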